Rebooting Ubuntu is hard. I don’t really know why, but in my twelve years as an Ubuntu user, I’ve encountered countless “stuck at reboot” scenarios. Somehow, typing reboot always comes with that extra special feeling of uncertainty and the thrill of danger — Will it come back? Where will it get stuck this time? If it’s your home computer or your laptop, that’s fine, because you can always manually hard reset. If it’s a remote computer to which you have IPMI access, it’s a little bit annoying, but not tragic. But if you’re attempting to reboot tens of thousands of devices across the globe, that level of uncertainty is nothing short of terrifying.

I know I’m being unfair, because more often than not, rebooting Ubuntu actually completes successfully. However, my incredibly unscientific estimate of how often things get stuck forever on shutdown or reboot is this: 1-3%. That’s how often I believe reboots hang. That’s shockingly high, right? Well, I pulled that out of my hat, but that estimate is based on many hundreds of thousands of reboots I’ve witnessed in our fleet of backup devices. That number is not too terrible when you deal with a handful of machines that you rarely ever reboot. It is, however, incredibly terrible if you reboot tens of thousands of devices running Ubuntu every two weeks as part of an upgrade process (I wrote about our image-based upgrade mechanism in another post).

This post describes the short story of how we managed to make Ubuntu machines reliably reboot.

1. What’s the problem?

When we first rolled out Image Based Upgrades, we encountered tons of stuck reboots. The first few thousand device reboots were terrifying; so many devices just didn’t come back up. For our customers, that meant driving on-site or using a remote power strip — both of which are annoying and costly.

Either things didn’t even begin the reboot process, like here:

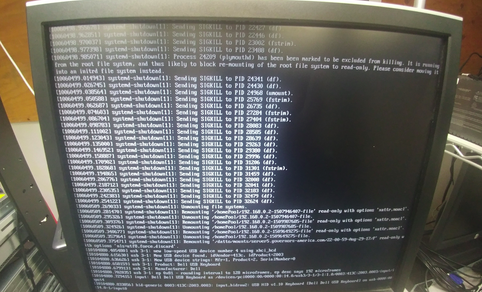

Or they got stuck at random points during the shutdown procedure:

Think about how hard it is to debug shutdown problems like this when you have IPMI access in your own datacenter. Now imagine how hard it is to debug without physical access to the machines, and having to rely on the good grace of your own customers and support team to work with you. We all worked very closely together with our customers, taking hundreds of pictures of hung machines trying to pinpoint the root cause. We spent days trying to reproduce the problems in-house, upgrading devices over and over again many times a day to force the issue. We analyzed ticket after ticket, but in the end we were none the wiser: Ubuntu seemed to have gotten stuck just at random positions in the shutdown process. There was no pattern.

So what now? Give up? Of course not. We didn’t give up. We merely chose to give up diagnosing the root cause and opted for the nuclear option instead: hard resetting.

2. How about Sysrq?

We first thought about using the Sysrq triggers that the Linux kernel provides to immediately trigger a hard-reset. Instead of calling reboot, we’d call this magical command to instruct the kernel to hard-reset the computer.

```bash
echo b > /proc/sysrq-trigger # Trigger hard-reset
```

This works wonderfully and actually is a viable option if you are 100% sure that you’ve written everything to disk that you need to, and that all the buffers are flushed to disk. So if you run a read-only Linux, this option may be for you. In all other cases, using just the echo b trigger is not enough, because you run the risk of corrupting random files, your file system, or other in-flight operations.

So what about this then?

```bash
echo s > /proc/sysrq-trigger # Sync all mounted filesystems to disk
echo b > /proc/sysrq-trigger # Trigger hard-reset
```

Well, yes, that’s better, because it flushes all the buffers to disk before pulling the cord. The chance of losing or corrupting data is very slim with this one. It’s still pretty nuclear if you ask me, and doesn’t make me feel warm and fuzzy inside. And as it turns out, echo s can hang indefinitely (much like sync) if the hardware doesn’t respond: the infamous uninterruptible sleep (D) strikes again. (Side note: I am far from an expert in this field, but the number of times TASK_UNINTERRUPTIBLE has bitten us left and right leads me to believe that there is something wrong with having a state like that in the kernel at all. That’s a story for another time, though.)
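If you suspect a sync is stuck like this, one quick check is to list the processes that are currently in uninterruptible sleep. This is only a minimal sketch: it assumes a procps-style `ps`, the function name `filter_d_state` is made up for illustration, and command names containing spaces are cut off at the first word.

```bash
# Print "PID COMMAND" for every process whose state starts with D
# (uninterruptible sleep). Expects lines of "STATE PID COMMAND" on stdin.
filter_d_state() {
  awk '$1 ~ /^D/ { print $2, $3 }'
}

# Usage on a live system (not executed here):
#   ps -eo stat=,pid=,comm= | filter_d_state
```

It only tells you that something is stuck, not why, which matches our experience with these hangs.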

So because echo s can hang, we briefly experimented with something like this:

```bash
timeout 10s bash -c 'echo s > /proc/sysrq-trigger' # Wait max 10s for sync
echo b > /proc/sysrq-trigger
```

We tested this for a while, but saw enough corrupt file systems (despite the echo s) that it scared us away from this approach. It also felt wrong to punish the 98% of devices that were rebooting just fine using reboot. What we really needed was a reboot mechanism that first tried to run a proper reboot, and only triggered a hard-reset when things got stuck for too long.

We reviewed how reboot is actually implemented and then thought about implementing our own reboot mechanism with a hard-reset fallback. How hard can it be? It’s just sending SIGTERM to all processes, and then waiting a while for things to gracefully shut down before triggering a hard-reset, right?

Before you raise your eyebrows too much, I’m kidding. We realized pretty quickly that if Ubuntu can’t get it right, we’d probably have a hard time too. So on to the next idea.

3. Introducing watchdogs

The Linux kernel provides a watchdog API whose entire purpose in life is to hard-reset systems that get stuck or hang due to unrecoverable errors such as a kernel panic. Turns out that many modern CPUs and some IPMI/BMC systems ship with a hardware-level watchdog implementation that hard-resets the host system unless a heartbeat is received regularly. When a watchdog is enabled, and a system freezes or otherwise hangs so that it cannot send a heartbeat, the watchdog resets the computer.

This is exactly what we needed!

Even though watchdogs certainly aren’t meant to be used to ensure a proper reboot, they absolutely can be used for what we need: enable a hardware-level watchdog that hard resets the machine in case the soft reboot via reboot fails.

There are multiple watchdog implementations, each of which comes with its own quirks. We have found the Intel CPU-based iTCO watchdog to be the most reliable, so we use that as a default. Unfortunately, some motherboard vendors disable this functionality in hardware. In that case, and on non-Intel devices, we have two fallback options:

- IPMI/BMC based watchdog: aside from the iTCO watchdog, we have found the ipmi_watchdog kernel module to be very reliable. Since it’s IPMI-based, you obviously need to have a BMC that supports it and make sure that it actually works. We have found some mainboards have faulty watchdogs, so beware of that and do extensive testing before using it.

- Software-based watchdog: the kernel provides a softdog module, which can be used if no hardware supported watchdog is available. The softdog is obviously not as reliable because it relies on the kernel to not have crashed entirely. It’s better than nothing though, that’s for sure.
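To see which of these watchdogs a machine actually ended up with, the kernel can expose some details under /sys/class/watchdog. The following is a rough sketch, not something from our tooling: it assumes the kernel was built with watchdog sysfs support, the function name `describe_watchdog` is hypothetical, and drivers that do not register with the kernel's watchdog core may not show up there at all.

```bash
# Print identity, timeout and nowayout for one watchdog sysfs directory,
# e.g. /sys/class/watchdog/watchdog0. Missing attributes print as "unknown".
describe_watchdog() {
  local dir="$1" attr value
  for attr in identity timeout nowayout; do
    if [ -r "$dir/$attr" ]; then
      value=$(cat "$dir/$attr")
    else
      value=unknown
    fi
    echo "$attr=$value"
  done
}

# Usage on a live system (not executed here):
#   for d in /sys/class/watchdog/watchdog*; do describe_watchdog "$d"; done
```

Whatever this prints, it is no substitute for actually testing that the watchdog resets the board.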

The watchdog driver provides a /dev/watchdog device that needs to be written to every X seconds. If nothing writes to it before the timeout expires, the system is hard reset. Usually some process holds a file handle open and regularly writes to it to pet the watchdog (there is a watchdog daemon for this purpose). In our case, we simply want to ensure that a hard reset is triggered after X seconds, so all we do is pet the watchdog once (open a file handle and close it again) before calling reboot.
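The difference between the two usage patterns can be sketched in a few lines of shell. Both function names are hypothetical, and the usage shown runs against a regular file. Pointing either function at a real /dev/watchdog starts the countdown, and the machine will be reset when it expires, so treat this as an illustration only.

```bash
# Arm the watchdog once: opening the device for writing starts the countdown.
# Nothing pets it afterwards, so the timer is left to expire.
arm_watchdog_once() {
  local dev="${1:-/dev/watchdog}"
  [ -e "$dev" ] || return 1
  : >> "$dev"
}

# For contrast, roughly what a keepalive daemon does: hold the device open
# and write to it at a fixed interval (here limited to `count` writes).
pet_watchdog() {
  local dev="$1" interval="$2" count="$3" i=0
  [ -e "$dev" ] || return 1
  exec 3>>"$dev"
  while [ "$i" -lt "$count" ]; do
    printf '.' >&3
    i=$((i + 1))
    sleep "$interval"
  done
  exec 3>&-
}

# Usage against a scratch file instead of the real device:
#   f=$(mktemp); arm_watchdog_once "$f"; pet_watchdog "$f" 1 3
```

For the reboot use case only the first pattern matters: arm the timer, then never pet it again.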

The timeout is specified via the heartbeat=.. (iTCO), timeout=.. (IPMI), or soft_margin=.. (softdog) parameter when loading the module via modprobe. The nowayout=1 parameter is necessary to ensure that the watchdog timer cannot be stopped by any means once it is started.
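Because each module names its timeout parameter differently, it can help to keep that mapping in one place. Here is a small sketch with a hypothetical helper name. The parameter names and the action=reset option for IPMI are taken from the script later in this post, and any other module is rejected rather than guessed at.

```bash
# Print the modprobe arguments for a watchdog module and a timeout in seconds.
# Only the three modules discussed in this post are known; others fail.
watchdog_modprobe_args() {
  local module="$1" seconds="$2"
  case "$module" in
    iTCO_wdt)      echo "$module nowayout=1 heartbeat=$seconds" ;;
    ipmi_watchdog) echo "$module nowayout=1 action=reset timeout=$seconds" ;;
    softdog)       echo "$module nowayout=1 soft_margin=$seconds" ;;
    *)             echo "unknown watchdog module: $module" >&2; return 1 ;;
  esac
}

# Usage (loading a module needs root; not executed here):
#   modprobe $(watchdog_modprobe_args softdog 600)
```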

4. Reliably rebooting with watchdogs

In short, here’s what we do in our BCDR devices to reliably reboot them as part of the OS upgrade:

- Load watchdog kernel module with a 10-minute timeout

- Access the watchdog device to start the heartbeat timer

- Sync, sync, sync the root partition (I know we don’t have to …)

- Reboot

If any of the steps after loading the watchdog module hangs or fails, the watchdog will trigger a hard-reset after 10 minutes. The actual code we use to execute these steps is part of our image-based upgrade tool upgradectl. Here’s a shorter version in Bash that is obviously not as well-tested, but it should work:

```bash
#!/bin/bash

# Watchdog modules in order of preference, each with a 10-minute timeout
WATCHDOGS=(
  "iTCO_wdt nowayout=1 heartbeat=600"
  "ipmi_watchdog nowayout=1 action=reset timeout=600"
  "softdog nowayout=1 soft_margin=600"
)

# Boards with known-bad IPMI watchdog implementations
BAD_BOARDS=(
  "A1SRi"
  "X9DRD-7LN4F"
  "X9DBL-3F/X9DBL-iF"
  "X9SRE/X9SRE-3F/X9SRi/X9SRi-3F"
  "X10SLH-F/X10SLM+F"
  "X10SLH-F/X10SLM+-F"
  "X10SLM-F"
  "X11SSH-F"
  "DCS"
  "0PM2CW"
)

board=$(dmidecode -s baseboard-product-name | head -n 1)
for watchdog in "${WATCHDOGS[@]}"; do
  if [[ $watchdog =~ "ipmi" && " ${BAD_BOARDS[@]} " =~ " ${board} " ]]; then
    echo "Skipping IPMI watchdog due to bad board $board"
    continue
  fi
  echo "Attempting to load watchdog $watchdog"
  modprobe $watchdog # Unquoted on purpose: module name and parameters are separate arguments
  udevadm settle
  sleep 1 # Give the kernel time to create the watchdog device
  if [ -e /dev/watchdog ]; then
    echo "Starting watchdog timer"
    touch /dev/watchdog # Open and close the device once to start the timer
    break
  fi
done

# Find the device backing the root filesystem and flush it three times
rootpart=$(awk -v needle="/" '$2==needle {print $1}' /proc/mounts | grep -v ^rootfs)
echo "Syncing root partition $rootpart"
for i in 1 2 3; do timeout 60s blockdev --flushbufs $rootpart; done

echo "Waiting 25 seconds"
sleep 25

echo "Reboot"
reboot
```

It’s not the prettiest script in the world, and yes, it’s got some magical sleeps in there that we would have rather avoided. But we have found that there are race conditions and slow background tasks that are difficult to pinpoint, so sleep is the next best thing. The sleep after loading the watchdog module is to ensure that the watchdog device has time to be put in place by the kernel. The 25 second sleep after the triple-sync is because we have found file corruption on the root disk in the field without it. It’s ugly, but it works.
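If the fixed sleep after modprobe bothers you, a bounded polling loop is one possible alternative for that particular wait. To be clear, this is a sketch of a different technique than the one in the script above. It has not been validated against the race conditions we saw in the field, and the function name `wait_for_path` is hypothetical.

```bash
# Wait until a path exists, polling once per second, for at most
# `timeout` seconds. Returns 0 if the path appeared, 1 on timeout.
wait_for_path() {
  local path="$1" timeout="$2" waited=0
  while [ ! -e "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Usage (not executed here):
#   wait_for_path /dev/watchdog 10 && touch /dev/watchdog
```

This does nothing for the 25 second sleep after the sync, since there is no obvious condition to poll for there.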

You may have also noticed some special logic for the IPMI watchdog: as it turns out, quite a few mainboards have either bad or faulty IPMI watchdog implementations. We have found boards that would continuously reboot devices (devices were stuck in reboot loops, so much fun …), or watchdogs that simply didn’t behave like they were supposed to. For that reason we’ve chosen not to use the IPMI watchdog for these boards.
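The board check in the script relies on a regex match against the space-joined list, which is compact but easy to misread. A more explicit variant could look like the sketch below, with a hypothetical function name and the board names passed in as arguments so that it can be tried without dmidecode:

```bash
# Return 0 if the board name (first argument) exactly equals one of the
# remaining arguments, 1 otherwise.
is_bad_board() {
  local board="$1" bad
  shift
  for bad in "$@"; do
    if [ "$board" = "$bad" ]; then
      return 0
    fi
  done
  return 1
}

# Usage with the list from the script above (not executed here):
#   is_bad_board "$board" "${BAD_BOARDS[@]}" && echo "Skipping IPMI watchdog"
```

The comparison is an exact string match, so a board reported as "X9SRi" would not match the combined entry "X9SRE/X9SRE-3F/X9SRi/X9SRi-3F". As far as I can tell, that is also how the original check behaves.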

Another thing worth noting is that if you already have a watchdog daemon running, this script will likely need some changes: instead of loading the watchdog module, you may want to reconfigure it. For IPMI, you can do this by writing to /sys/module/ipmi_watchdog/parameters, and I’m sure the other modules have similar toggles.
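Reconfiguring a loaded module that way could be sketched as follows. The helper name is hypothetical, and the assumption that a given parameter (for example timeout) is writable at runtime is exactly that, an assumption you should verify on your kernel. The function takes the parameters directory as an argument so it can be tried against a scratch directory first.

```bash
# Write a value to a module parameter file and read it back to confirm.
# params_dir would be /sys/module/ipmi_watchdog/parameters on a live system.
set_module_param() {
  local params_dir="$1" name="$2" value="$3"
  [ -w "$params_dir/$name" ] || return 1
  echo "$value" > "$params_dir/$name" || return 1
  [ "$(cat "$params_dir/$name")" = "$value" ]
}

# Usage (needs root and a loaded module; not executed here):
#   set_module_param /sys/module/ipmi_watchdog/parameters timeout 600
```

Reading the value back catches the case where the kernel accepts the write but keeps a different value.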

5. Verdict

Rebooting reliably is more complicated than I would have ever imagined.

I don’t think the option we have chosen here is the best there is, but it has been working reliably for over 3 years. Our tens of thousands of appliances upgrade and reboot every 2-3 weeks, and the number of devices that don’t come back from a reboot is incredibly low — much lower than before we started using the watchdog-assisted reboots. Nowadays, if a device doesn’t come back from a reboot, it’s typically because of bad hardware (e.g., bad board or bad RAM), and not because it got stuck in the shutdown process somewhere.

So all in all, I’d say we’re pretty happy with this approach, and that extra special thrill that I was talking about when we reboot our devices has definitely gone away.
