The capabilities of cell phones have increased dramatically over the last few years. While mobile phones used to be primarily for making phone calls, modern smartphones have become all-round devices. With Internet access and a variety of sensors (e.g. location or noise), mobile applications have become interactive and flexible. The trend towards location-based services and context-awareness allows applications to react to their surroundings and to behave intuitively towards the user.

For developers, context-awareness can be both a blessing and a curse. While the mobile operating systems iPhone OS and Android come with relatively good sensor support, the vast majority of devices must make do with Java ME’s basic and heterogeneous sensor functionality.

The Aware Context API (ACAPI) aims to bridge this gap by providing a framework for building context-aware applications for mobile devices based on Java ME. In this article, I’d like to briefly introduce ACAPI, its structure and its usage. Please feel free to comment.


1. Motivation and Goals

ACAPI is designed to allow easy and homogeneous access to the different sensors of the mobile device. It creates an abstraction between the available sensors and their usage so that developers can focus on the business logic rather than on how to use the sensor implementations.

Example: A mobile application shall notify the user when another (previously defined) person comes within range, e.g. when the boss arrives at the office.

Using the standard Java ME interfaces, developers have to learn several different APIs and write a lot of code to solve this or similar problems. In this use case, the application needs to determine its position (the office), monitor the devices around it (the boss’s phone) and notify the user when both events occur.

The Aware Context API aims to solve this recurring problem with an easy-to-use, event-based framework that lets developers define rules over the available sensor data. Using ACAPI, the example above can be solved by defining a rule and an action that is triggered when the rule matches:

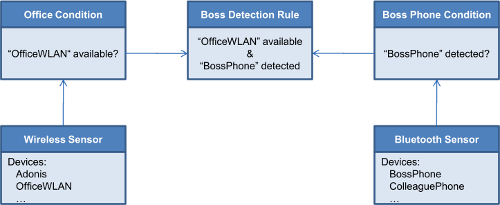

- Rule: Wi-Fi “OfficeWLAN” available AND Bluetooth device “BossPhone” available

- Action: Notify user, e.g. by playing a sound

2. Development Team and Scope

The ACAPI project was developed as a team project within the curriculum of a Master of Science degree at the University of Mannheim. The project was carried out over one year at the Chair of Business Administration and Information Systems, under the supervision of Prof. Armin Heinzl and his research assistants Erik Hemmer and Sebastian Stuckenberg.

The project team consisted of Lars Bakker, Philipp Heckel (myself), Obie Modisane, Benjamin Schubert and Moritz Wächter.

3. Aware Context API (ACAPI)

The Aware Context API is well-structured and easy to understand. It is easily extensible and supports a broad range of devices. It is mainly based on Java ME, but uses native code where needed (e.g. for Wi-Fi, battery or telephony).

3.1. ACAPI Structure

ACAPI is horizontally structured into three layers:

- Sensor: On the lowest level, sensors gather data about the current status and context of the phone. A Wi-Fi sensor, for instance, collects the available access points and issues an event whenever this data changes. Applications can either hook directly into the sensor events or use higher-level abstractions (conditions and rules).

- Condition: To evaluate a single sensor, conditions compare the sensor’s properties to given values. A condition is either true or false. A location condition, for example, becomes true when the phone comes within range of certain coordinates. Like a sensor, a condition issues an event when its value changes (from true to false, or vice versa).

- Rule: To express more than one condition, rules combine conditions into a more complex logical expression. In the example above, the rule only matches if both conditions match (“in the office” and “boss’s phone available”).

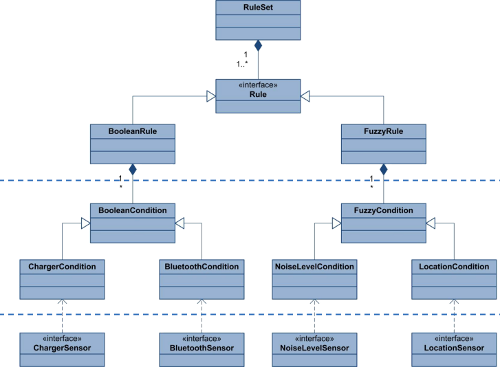

ACAPI Structure (simplified and incomplete!): The Aware Context API is layer-based. Each of the components is easily extensible and has event listeners to react to changes. This chart shows the interdependence of the different layers.

Using this layered structure, ACAPI fundamentally changes the development strategy of mobile applications. Instead of predefining a screen and/or process flow, applications are event-driven. Whenever a rule changes its state (match vs. no match), the application can react by displaying a different screen, notifying the user, or by performing other actions.
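To make the layering concrete, here is a minimal sketch in plain Java SE of how sensor readings could flow upwards as edge-triggered events. The classes `DeviceSensor`, `DeviceCondition` and `AndRule` are hypothetical illustrations, not ACAPI’s actual classes, and real Java ME (CLDC) code would have to do without generics and method references. The key idea is that each layer only notifies the layer above when its own state changes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of ACAPI-style layering (not ACAPI's real classes).

// Sensor layer: publishes the set of device names visible in the latest scan.
class DeviceSensor {
    private final List<DeviceCondition> subscribers = new ArrayList<>();

    void subscribe(DeviceCondition condition) { subscribers.add(condition); }

    void publish(Set<String> visibleDevices) {
        for (DeviceCondition c : subscribers) c.onReading(visibleDevices);
    }
}

// Condition layer: true while one named device is visible; reports only transitions.
class DeviceCondition {
    private final String device;
    private boolean value;
    private AndRule owner;

    DeviceCondition(DeviceSensor sensor, String device) {
        this.device = device;
        sensor.subscribe(this);
    }

    void setOwner(AndRule rule) { owner = rule; }

    boolean value() { return value; }

    void onReading(Set<String> visibleDevices) {
        boolean now = visibleDevices.contains(device);
        if (now != value) {                       // edge-triggered: ignore repeated readings
            value = now;
            if (owner != null) owner.onConditionChanged();
        }
    }
}

// Rule layer: Boolean AND over its conditions; notifies listeners when the result flips.
class AndRule {
    interface Listener { void ruleChanged(boolean matches); }

    private final List<DeviceCondition> conditions = new ArrayList<>();
    private final List<Listener> listeners = new ArrayList<>();
    private boolean matches;

    void addCondition(DeviceCondition c) {
        conditions.add(c);
        c.setOwner(this);
    }

    void addListener(Listener l) { listeners.add(l); }

    boolean matches() { return matches; }

    void onConditionChanged() {
        boolean now = !conditions.isEmpty();
        for (DeviceCondition c : conditions) now = now && c.value();
        if (now != matches) {
            matches = now;
            for (Listener l : listeners) l.ruleChanged(now);
        }
    }
}
```

Wiring a Wi-Fi and a Bluetooth `DeviceSensor` to conditions for “OfficeWLAN” and “BossPhone” in one `AndRule` yields a single `true` event once both devices are visible and a single `false` event when one of them disappears; repeated identical scans produce no events at all.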

Besides the horizontal division, the API is also vertically divided into two logical parts: Boolean and Fuzzy. The Boolean part assumes that sensors are accurate, whereas the Fuzzy conditions and rules take measurement errors and inaccuracy into account. Boolean conditions and rules can only become true or false; their Fuzzy counterparts implement a score-based system that only triggers when a certain threshold is reached. This is particularly relevant for sensors that supply accuracy data, e.g. GPS sensors.
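The score-based idea could look roughly like the following sketch. It assumes a location condition on local planar coordinates in metres, a linear membership ramp between an inner and an outer radius, a pessimistic treatment of GPS accuracy (the accuracy radius is added to the measured distance), and the common fuzzy-logic choice of the minimum as AND. All of these choices, and the names `FuzzyCondition`, `FuzzyNearCondition` and `FuzzyAndRule`, are assumptions for illustration only; ACAPI’s Fuzzy part had not been implemented at the time of writing.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical fuzzy layer: a condition yields a score in [0, 1] instead of true/false.
interface FuzzyCondition {
    double score();
}

// "Near a point" on local planar coordinates (metres). The GPS accuracy radius is added
// to the measured distance, so an imprecise fix lowers the score (a pessimistic choice).
class FuzzyNearCondition implements FuzzyCondition {
    private final double targetX, targetY, innerRadius, outerRadius;
    private double score = 0.0;

    FuzzyNearCondition(double targetX, double targetY, double innerRadius, double outerRadius) {
        if (innerRadius >= outerRadius) throw new IllegalArgumentException("inner must be < outer");
        this.targetX = targetX;
        this.targetY = targetY;
        this.innerRadius = innerRadius;
        this.outerRadius = outerRadius;
    }

    void update(double x, double y, double accuracy) {
        double d = Math.hypot(x - targetX, y - targetY) + accuracy;
        if (d <= innerRadius) score = 1.0;          // full membership
        else if (d >= outerRadius) score = 0.0;     // no membership
        else score = (outerRadius - d) / (outerRadius - innerRadius); // linear ramp
    }

    @Override
    public double score() { return score; }
}

// Fuzzy AND = minimum of the condition scores; the rule matches once it reaches the threshold.
class FuzzyAndRule {
    private final List<FuzzyCondition> conditions = new ArrayList<>();
    private final double threshold;

    FuzzyAndRule(double threshold) { this.threshold = threshold; }

    void addCondition(FuzzyCondition c) { conditions.add(c); }

    double score() {
        if (conditions.isEmpty()) return 0.0;
        double s = 1.0;
        for (FuzzyCondition c : conditions) s = Math.min(s, c.score());
        return s;
    }

    boolean matches() { return score() >= threshold; }
}
```

With the office at (0, 0), an inner radius of 50 m and an outer radius of 150 m, a fix 50 m away with 10 m accuracy scores 0.9, while the same fix with 80 m accuracy scores only 0.2. A rule with threshold 0.5 therefore stops matching even though the reported position did not change.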

3.2. Implemented Sensors

The current code base of ACAPI includes several predefined sensors, including the most common: Bluetooth and GPS. Most sensors are entirely based on Java ME and will work on any phone that supports the corresponding JSR. However, since Java ME does not provide access to some functionalities, a few native implementations are required (e.g. for Wi-Fi, battery status or telephony status).

The following sensors are already implemented:

- Battery Sensor (native S60): This sensor monitors the battery level (%) and the charger status (an enum value, e.g. on-battery or plugged-in). There is currently only a Symbian S60 implementation of this sensor, since Java ME does not allow access to battery data.

- Bluetooth Sensor: This sensor monitors available devices, e.g. phones or laptops. It can react to devices joining or leaving.

- Custom Sensor: This sensor allows the integration of business logic in ACAPI, so that rules do not only include actual sensor data, but also virtual business sensor data.

- Location Sensor (GPS and Wi-Fi; partially native S60): This sensor monitors the position and the speed. It combines GPS and Wi-Fi triangulation to obtain a fast and accurate position. Since Java ME does not allow access to the Wi-Fi hardware, the Wi-Fi part is native S60 code.

- Noise Level Sensor: This sensor monitors the noise level (in decibels) of the surrounding area.

- Time Sensor: This sensor delivers the current time and can react to date and time changes.

- Wireless Sensor (native S60): This sensor monitors the available Wi-Fi devices, i.e. access points. It can react to devices joining or leaving. In combination with a web service, it can be used to estimate the position.
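Both the Bluetooth and the Wireless sensor react to devices joining or leaving. One straightforward way to derive these events, sketched below under the assumption that each scan returns the set of visible device names, is to compare two successive scans with set differences. `ScanDiff` and `DeviceTracker` are hypothetical names, not part of ACAPI.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;
import java.util.TreeSet;

// Result of comparing two scans: devices that appeared and devices that disappeared.
class ScanDiff {
    final Set<String> joined;
    final Set<String> left;

    private ScanDiff(Set<String> joined, Set<String> left) {
        this.joined = Collections.unmodifiableSet(joined);
        this.left = Collections.unmodifiableSet(left);
    }

    static ScanDiff between(Set<String> previous, Set<String> current) {
        Set<String> joined = new TreeSet<>(current);
        joined.removeAll(previous);          // visible now, not before
        Set<String> left = new TreeSet<>(previous);
        left.removeAll(current);             // visible before, not now
        return new ScanDiff(joined, left);
    }
}

// Keeps the previous scan so that each new scan can be turned into join/leave events.
class DeviceTracker {
    private Set<String> previous = new HashSet<>();

    ScanDiff onScan(Set<String> current) {
        ScanDiff diff = ScanDiff.between(previous, current);
        previous = new HashSet<>(current);
        return diff;
    }
}
```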

Many other sensors could be implemented using the available abstract classes, for example an orientation sensor (reacting to device movement) or a telephony sensor (reacting to calls, SMS etc.).
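A base class for such sensors could follow the template-method pattern: subclasses only say how to read a value, while change detection and listener notification are inherited. The sketch below is hypothetical (`AbstractPollingSensor` and `OpenOrdersSensor` are not ACAPI’s abstract classes) and uses Java SE’s `java.util.function.Consumer` for brevity; the subclass mimics the Custom Sensor idea of feeding business data into the rules engine.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Consumer;

// Hypothetical template-method base class: subclasses implement read(), the base class
// handles change detection and listener notification.
abstract class AbstractPollingSensor<T> {
    private final List<Consumer<T>> listeners = new ArrayList<>();
    private T lastValue;
    private boolean hasValue;

    // Reads the current value from the underlying source (hardware, native code, app logic).
    protected abstract T read();

    void addListener(Consumer<T> listener) { listeners.add(listener); }

    // Meant to be called periodically (e.g. from a timer); notifies listeners only on change.
    final void poll() {
        T current = read();
        if (!hasValue || !Objects.equals(current, lastValue)) {
            lastValue = current;
            hasValue = true;
            for (Consumer<T> l : listeners) l.accept(current);
        }
    }
}

// A "virtual" business sensor in the spirit of the Custom Sensor: application logic
// sets the value, and listeners such as conditions subscribe to it like to any sensor.
class OpenOrdersSensor extends AbstractPollingSensor<Integer> {
    private int openOrders;

    void setOpenOrders(int count) { openOrders = count; }

    @Override
    protected Integer read() { return openOrders; }
}
```

Polling twice without a change notifies listeners only once, so conditions built on top of such a sensor see the same edge-triggered behavior as with hardware sensors.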

4. Example Usage

Having discussed the structure of the Aware Context API, the following section elaborates on the example mentioned above. It explains the scenario and shows concrete example code.

4.1. Example Scenario and Code

As briefly mentioned above, the example scenario for demonstrating the API is very simple: the application shall display a warning message and play a warning sound when the boss arrives at the office.

The two conditions depicted in the diagram above are combined in one Boolean rule, i.e. the rule only becomes true if both of the conditions match.

Similar to the API concept, its actual usage is also very simple. The following code snippet shows how to implement the above example in a regular Java ME application.

```java
 1  // Conditions
 2  Condition inOffice = new WirelessNearCondition("OfficeWLAN");
 3  Condition bossPhone = new BluetoothNearCondition("BossPhone");
 4
 5  // The two conditions create one rule
 6  Rule bossDetectionRule = new BooleanRule();
 7  bossDetectionRule.addCondition(inOffice);
 8  bossDetectionRule.addCondition(bossPhone);
 9
10  // React when the rule matches
11  bossDetectionRule.addRuleListener(new RuleListener() {
12      public void ruleChanged(Rule rule, boolean matches) {
13          if (matches) {
14              playWarningSound();
15              displayWarningMessage("Warning: boss has arrived!");
16          }
17  }});
18
19  // Activate rule
20  bossDetectionRule.setActive(true);
```

In lines 2-3, the specific conditions are created. Since both conditions represent very generic cases (Bluetooth/WLAN device in range), ACAPI provides predefined conditions for them. Where more complex tests are needed, conditions can be extended via simple Java inheritance. Lines 6-8 combine the two conditions into a single Boolean rule, i.e. a rule that becomes either true or false depending on the status of its conditions. Since the application is supposed to react to changes in this particular rule, it registers a listener in lines 11-17. When the rule is activated (line 20), it tells its conditions to register themselves with the corresponding sensors, which in turn are started (if not already running). After this initialization, ACAPI notifies all registered listeners whenever the rule changes.

Depending on the status of the rules and conditions, i.e. on the device context, the application can change its appearance, behavior or internal state. In this case, it only plays a warning sound and displays a warning message (lines 14-15).
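The activation step described for line 20, in which sensors are only started when a rule that needs them becomes active, can be sketched with simple reference counting. This is an assumed mechanism for illustration, not ACAPI’s code; `ManagedSensor`, `LazyCondition` and `LazyRule` are hypothetical names, and `start()`/`stop()` are placeholders for the actual hardware calls.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sensor that is only running while at least one condition uses it.
class ManagedSensor {
    private final String name;
    private int subscribers;

    ManagedSensor(String name) { this.name = name; }

    boolean isRunning() { return subscribers > 0; }

    void register() {
        if (subscribers++ == 0) start();   // first subscriber starts the sensor
    }

    void unregister() {
        if (subscribers == 0) throw new IllegalStateException(name + " has no subscribers");
        if (--subscribers == 0) stop();    // last subscriber stops it again
    }

    private void start() { /* placeholder: e.g. begin Bluetooth inquiry or Wi-Fi scans */ }
    private void stop()  { /* placeholder: release the underlying resource */ }
}

// A condition registers with its sensor only while its rule is active.
class LazyCondition {
    private final ManagedSensor sensor;

    LazyCondition(ManagedSensor sensor) { this.sensor = sensor; }

    void activate()   { sensor.register(); }
    void deactivate() { sensor.unregister(); }
}

class LazyRule {
    private final List<LazyCondition> conditions = new ArrayList<>();
    private boolean active;

    void addCondition(LazyCondition c) { conditions.add(c); }

    // Activation cascades down from the rule to its conditions and their sensors.
    void setActive(boolean on) {
        if (on == active) return;          // ignore redundant calls
        active = on;
        for (LazyCondition c : conditions) {
            if (on) c.activate(); else c.deactivate();
        }
    }
}
```

Sharing one counted sensor between several rules means the Bluetooth radio keeps running until the last active rule that depends on it is deactivated, which matters on battery-powered devices.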

4.2. Proof-of-Concept Application

To test the implemented sensors and the rules engine of ACAPI, we developed a proof-of-concept application that implements a more sophisticated context-driven use case.

The application is a field service automation tool that reacts to the context the worker is currently in. This use case fits ACAPI nicely and offers real value for businesses. However, as this part of our project is not open source, I will not go into more detail here.

5. Future Work and Conclusion

The Aware Context API provides a framework for building context-aware applications for mobile devices based on Java ME. By providing uniform interfaces to different sensors, the library allows the development of context-driven applications.

The idea and structure of ACAPI are very solid; however, the actual implementation is at a very early stage of development. While most sensors and the Boolean conditions/rules already work on the test devices, the Fuzzy conditions and rules are yet to be implemented. The native part only covers Symbian S60 so far and lacks stability. Future work will therefore include implementing the missing parts, testing and documentation.

A. Download and License

ACAPI will be released as open source, possibly under the GPL or a Creative Commons license. Since we have not finished cleaning up and commenting the code, it is not yet available for download.

However, since it will be open source anyway, I will give out the code upon request.

Hi Philipp,

As a group of students, we are working on a mobile context API and database. We are using db4o to store the context information. Can you please provide us with the code so that we can adapt it to our project domain? All we need to do is create the API for context and store the context in the database. If this quick start is available, we can work out the remaining aspects. I hope you get my point.

thanks and regards,

sairam ghanta

Hey Sairam,

I am travelling right now. I will send you everything as soon as I get back home.

Regards,

Philipp

Hi Philipp,

Thanks for your response. I’m eagerly waiting for your further reply.

thanks and regards,

sairam ghanta

Hi Philipp,

When will you be free? I want to talk with you about context-aware applications. Please PM me whenever you are free.

thanks and regards,

sairam ghanta

Hi Philipp,

You have explained it very nicely; I think by now you have finished it. We are implementing a context-aware API for Android-based mobile phones. After reading your material, I got some idea of how to do it. But since you used the example of notifying the user about the boss’s arrival or availability: how was that done?

Can you give us a brief idea and share your code with us?

Thanks,

Praveen

Hello Praveen,

I would not exactly say it’s “finished”, but I do have a piece of code that is (or was) working okay on Symbian. Symbian is of course not exactly state-of-the-art anymore, but you could port it to Android more or less easily. I think the API is pretty good; much of the device- and platform-specific sensor code would have to be reimplemented, though.

Here is the code as of 2010. The first package is the Java-based API, the second archive is the S60 native C code. Some of the sensors in the “impl.nokia”-package communicate with the native code via plain sockets. I think it’s a good start if you want to do something similar.

If you use the code, please let me know for what — just out of interest:

http://blog.philippheckel.com/uploads/2010/08/acapi.tar.gz

http://blog.philippheckel.com/uploads/2010/08/acapi-s60.tar.gz

Best regards,

Philipp