At work, I use Elasticsearch a lot. Elasticsearch is pretty famous by now, so I doubt that it needs an introduction. But if you happen to not know what it is: it’s a document store with unique search capabilities and incredible scalability.

Despite its incredible features though, it has its rough edges. And no, I don’t mean the horrific query language (honestly, who thought that was a good idea?). I mean the fact that without external tools it’s quite impossible to import, export, copy, move or re-shard an Elasticsearch index. Indices are very final, unfortunately.

This is often inconvenient if you have a growing index whose shards are outgrowing their limits (a single shard can hold at most about 2 billion documents). It’s just as inconvenient if you have the opposite problem: an ES cluster with too many shards because you have too many indices (~800 shards per host is the recommendation, I think).

This is why I wrote elastictl: elastictl is a simple tool to import/export Elasticsearch indices into a file, and/or reshard an index. In this short post, I’ll show a few examples of how it can be used.

Usage

elastictl can be used for:

- Backup/restore of an Elasticsearch index

- Performance test an Elasticsearch cluster (import with high concurrency, see --workers)

- Change the shard/replica count of an index (see elastictl reshard command)

It’s a tiny utility, so don’t expect too much, but it’s helped our work quite a bit. It allows you to easily copy an index, move it, or test the indexing concurrency supported by your cluster. On my local cluster, I was able to import ~10k documents per second.

Here’s a short usage overview:

    $ elastictl
    NAME:
      elastictl - Elasticsearch toolkit

    USAGE:
      elastictl COMMAND [OPTION..] [ARG..]

    COMMANDS:
      export, e   Export an entire index to STDOUT
      import, i   Write to ES index from STDIN
      reshard, r  Reshard index using different shard/replica counts

    Try 'elastictl COMMAND --help' for more information.

    elastictl 0.0.5 (e645803), runtime go1.16, built at 2021-04-14T15:05:42Z
    Copyright (C) 2021 Philipp C. Heckel, distributed under the Apache License 2.0

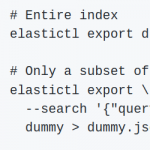

Export/dump an index to a file

To back up an index into a file, including its mapping and all the documents, you can use the elastictl export command. It will write out JSON to STDOUT. The file format is pretty simple: the first line of the output format is the mapping, the rest are the documents. You can even export only a subset of an index using the --search/-q option (that is, if you can master the query language).

    # Entire index (assumes that ES is running at localhost:9200)
    elastictl export my-index | gzip > my-index.json.gz

    # Only a subset of documents
    elastictl export \
      --host 10.0.1.2:9200 \
      --search '{"query":{"bool":{"must_not":{"match":{"eventType":"Success"}}}}}' \
      my-index > my-index-no-successes.json
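To make the file format concrete, here’s a minimal Python sketch that reads such an export back in. It assumes that every line is one self-contained JSON object (the mapping first, then one document per line); the function name `read_export` and the sample data are made up for illustration and are not part of elastictl.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO, Tuple


def read_export(stream: TextIO) -> Tuple[Dict[str, Any], Iterator[Dict[str, Any]]]:
    """Split an export stream into (mapping, lazy iterator over documents).

    Assumption: line 1 is the mapping, every following non-empty line is a document.
    """
    first_line = stream.readline()
    if not first_line.strip():
        raise ValueError("empty export: expected the mapping on the first line")
    mapping = json.loads(first_line)

    def documents() -> Iterator[Dict[str, Any]]:
        for line in stream:
            if line.strip():  # tolerate blank lines, e.g. at the end of the file
                yield json.loads(line)

    return mapping, documents()


# Tiny in-memory stand-in for an exported file (hypothetical content).
sample = io.StringIO(
    '{"mappings": {}}\n'
    '{"eventType": "Success"}\n'
    '{"eventType": "Failure"}\n'
)
mapping, docs = read_export(sample)
print("mapping keys:", sorted(mapping))
print("documents:", sum(1 for _ in docs))
```

For a gzipped export like the one above, `gzip.open("my-index.json.gz", "rt")` returns a text stream that can be passed to the same function. The documents are read lazily, so even a large export does not have to fit into memory.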

If you’re wondering “isn’t this just like elasticdump?”, the answer is yes and no. I naturally tried elasticdump first, but it didn’t really work for me: I had issues installing it via npm, and it was, quite frankly, rather slow. elasticdump also doesn’t support resharding, though it has many other cool features.

Import to new index

The elastictl import command will read from STDIN and write a previously exported file to a new or existing index with configurable concurrency. Using a high number of --workers, you can really hammer the ES cluster. It’s actually quite easy to make even large clusters fall over like this (assuming of course that you’re pointing --host to a load balancer):

    # With high concurrency
    zcat my-index.json.gz | elastictl import --workers 100 my-index-copy

    # Just copy an index
    elastictl export my-index | elastictl import my-index-copy2
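If you’re curious what “configurable concurrency” boils down to, here’s a rough Python sketch of a worker pool that sends documents in parallel. This is not how elastictl is implemented (it’s written in Go); `index_concurrently` and `fake_send` are hypothetical names, and `fake_send` only records documents instead of making a real HTTP request.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Iterable


def index_concurrently(
    docs: Iterable[Dict[str, Any]],
    send: Callable[[Dict[str, Any]], None],
    workers: int = 50,
) -> int:
    """Call send(doc) for every document from a pool of worker threads.

    Returns the number of documents sent.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands every document to the pool; list() waits for all calls
        # and re-raises the first exception raised by send(), if any.
        return len(list(pool.map(send, docs)))


# Stand-in for a real indexing request: just record what would have been sent.
sent = []
lock = threading.Lock()


def fake_send(doc: Dict[str, Any]) -> None:
    with lock:
        sent.append(doc)


count = index_concurrently(({"n": i} for i in range(1000)), fake_send, workers=100)
print(count, len(sent))
```

Note that `pool.map` queues all documents up front, so a real tool working on a huge export would want to bound the queue. The point here is only that more workers mean more requests in flight at the same time, which is exactly what puts pressure on the cluster.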

There are other options you can pass to the elastictl import command to modify the mapping slightly (mostly the number of replicas and the number of shards):

    $ elastictl import --help
    NAME:
      elastictl import - Write to ES index from STDIN

    USAGE:
      elastictl import INDEX

    OPTIONS:
      --host value, -H value      override default host (default: localhost:9200)
      --workers value, -w value   number of concurrent workers (default: 50)
      --shards value, -s value    override the number of shards on index creation (default: no change)
      --replicas value, -r value  override the number of replicas on index creation (default: no change)
      --no-create, -N             do not create index (default: false)
      --help, -h                  show help (default: false)

Re-shard an index

The elastictl reshard command is a combination of the two commands above: it first exports an index into a file and then re-imports it with a different number of shards and/or replicas.

    # Set number of shards of the "my-index" index to 10 and the number of replicas to 1
    elastictl reshard \
      --shards 10 \
      --replicas 1 \
      my-index

    # Export a subset of the "my-index" index and re-import it with a smaller number of shards/replicas
    elastictl reshard \
      --search '{"query":{"bool":{"must_not":{"match":{"eventType":"Success"}}}}}' \
      --shards 1 \
      --replicas 1 \
      my-index
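Conceptually, the only thing that changes between export and re-import is the shard/replica count in the index definition. Here’s a small Python sketch of that override step. It assumes that the index definition carries its settings under `settings.index`, as in the body of Elasticsearch’s create-index API; whether elastictl’s export file uses exactly this layout is an assumption, and `override_counts` is a made-up name.

```python
import copy
from typing import Any, Dict, Optional


def override_counts(
    index_def: Dict[str, Any],
    shards: Optional[int] = None,
    replicas: Optional[int] = None,
) -> Dict[str, Any]:
    """Return a copy of index_def with new shard/replica counts.

    None means "no change", mirroring the defaults of --shards and --replicas.
    Assumption: settings live under index_def["settings"]["index"].
    """
    if shards is not None and shards < 1:
        raise ValueError("an index needs at least one shard")
    if replicas is not None and replicas < 0:
        raise ValueError("replica count cannot be negative")

    updated = copy.deepcopy(index_def)  # leave the caller's dict untouched
    index_settings = updated.setdefault("settings", {}).setdefault("index", {})
    if shards is not None:
        index_settings["number_of_shards"] = shards
    if replicas is not None:
        index_settings["number_of_replicas"] = replicas
    return updated


# Hypothetical index definition, as it might appear on the first line of an export.
exported = {
    "mappings": {"properties": {"eventType": {"type": "keyword"}}},
    "settings": {"index": {"number_of_shards": "5", "number_of_replicas": "2"}},
}
resharded = override_counts(exported, shards=10, replicas=1)
print(resharded["settings"]["index"])
```

The shard count has to be set when the new index is created, since it cannot simply be changed on an existing index, which is why resharding goes through an export and a fresh import in the first place.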

Note: Similar to the _reindex API in Elasticsearch, this command should be used while the index is not being written to, because documents coming in after the command was kicked off will otherwise be lost. Please also note that the command does DELETE the index after exporting it. A copy will be available on disk though.

Feedback is welcome

elastictl is a tiny little tool, and I’m sure there are others that do a similar job. The tool is open source and available under the Apache 2.0 license, so please feel free to send contributions via a pull request on GitHub.

Good post

After this, I got stuck on a Filebeat 401 Unauthorized error with AWS Elasticsearch.

Got help from

https://learningsubway.com/filebeat-401-unauthorized-error-with-aws-elasticsearch/

As far as I can see, elastictl splits the docs for reimport and creates a single HTTP Request per Document.

Will you implement this as bulk, too? I’d guess, if you do so, you’ll easily exceed 10K Docs/s.

With these lines, I’m able to index ca. 10k docs/s, but now the single Python process is the bottleneck, running at 100% CPU. I’m feeding it with a raw dump, which gets decompressed on the fly by zstd.

But I think with Go, it would be no problem to make zstd run at 100% CPU ;-)

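    #!/usr/bin/env python3
    # Bulk-index JSON lines read from STDIN into Elasticsearch.
    from collections import deque
    import sys

    from elasticsearch import Elasticsearch
    from elasticsearch import helpers
    import jsonlines

    es = Elasticsearch("")

    # parallel_bulk sends chunks of 5000 actions from 8 threads and returns a lazy generator.
    pb = helpers.parallel_bulk(es, actions=jsonlines.Reader(sys.stdin), chunk_size=5000, thread_count=8)

    # Drain the generator without storing the results; nothing is sent until it is consumed.
    deque(pb, maxlen=0)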
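For readers who haven’t used the bulk API: the difference to one request per document is that many documents travel in a single newline-delimited JSON body, where each document is preceded by an action line. A minimal Python sketch of building such a body follows; `bulk_body` and the index name are made up for illustration, and the helpers above do this (and more) internally.

```python
import json
from typing import Any, Dict, Iterable


def bulk_body(index: str, docs: Iterable[Dict[str, Any]]) -> str:
    """Build a newline-delimited JSON body for Elasticsearch's _bulk endpoint.

    Each document becomes two lines: an "index" action, then the document itself.
    """
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    if not lines:
        return ""
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


body = bulk_body(
    "my-index-copy",
    [{"eventType": "Success"}, {"eventType": "Failure"}],
)
print(body, end="")
```

Sending a few thousand documents per body instead of one request each removes most of the per-request overhead, which is presumably where the extra throughput comes from.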